RESEARCH PUBLICATION

Exploring Trust and Transparency in Retrieval-Augmented Generation for Domain Experts

Published at CHI EA '25 (Extended Abstracts of the CHI Conference on Human Factors in Computing Systems)

Investigating how design features in RAG systems can enhance trust and transparency for financial domain experts through user-centered design principles and empirical evaluation.

My Role

- User Experience Research

- User Testing

- Interface Prototyping

Research Context

This study was conducted as part of my academic research, focusing on the intersection of AI systems and human-computer interaction. The work addresses critical challenges in making AI systems trustworthy for domain experts who possess deep subject knowledge but limited technical familiarity with AI.

The Problem

How might we design RAG systems that build appropriate trust and transparency for domain experts in high-stakes decision-making contexts?

Current RAG systems often lack the transparency features needed for domain experts to understand, verify, and trust AI-generated responses, particularly in critical domains like finance where errors can have severe consequences.

Who is this for?

We focused on financial professionals as our primary user group - domain experts who:

- Have deep financial expertise but limited AI technical knowledge

- Make high-stakes decisions based on information analysis

- Need to verify and trace the sources of AI-generated insights

- Require control over their analytical workflows

Why does this matter?

#1 TRUST CALIBRATION

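Domain experts need appropriately calibrated trust in AI systems, avoiding both over-reliance and under-utilization. Our research found that transparency features significantly affect how that trust forms: text highlighting combined with confidence levels increased trust, while confidence levels alone did not.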

#2 TRANSPARENCY & VERIFICATION

Financial professionals need to trace AI responses back to source documents to verify their accuracy and understand the reasoning behind them. This traceability is essential for regulatory compliance and for confidence in their decisions.

#3 USER AGENCY & CONTROL

Experts want control over which sources to trust, how to explore information, and how to customize the system to their workflow preferences.

RESEARCH QUESTION

How do confidence levels, source attribution, and text highlighting impact user trust and understanding in RAG systems for financial analysis?

Development Process

RESEARCH METHODOLOGY

Our study employed a mixed-methods approach combining quantitative trust measurements with qualitative user preference analysis through a controlled user study with 50 financial professionals.

SYSTEM FEATURES EVALUATED

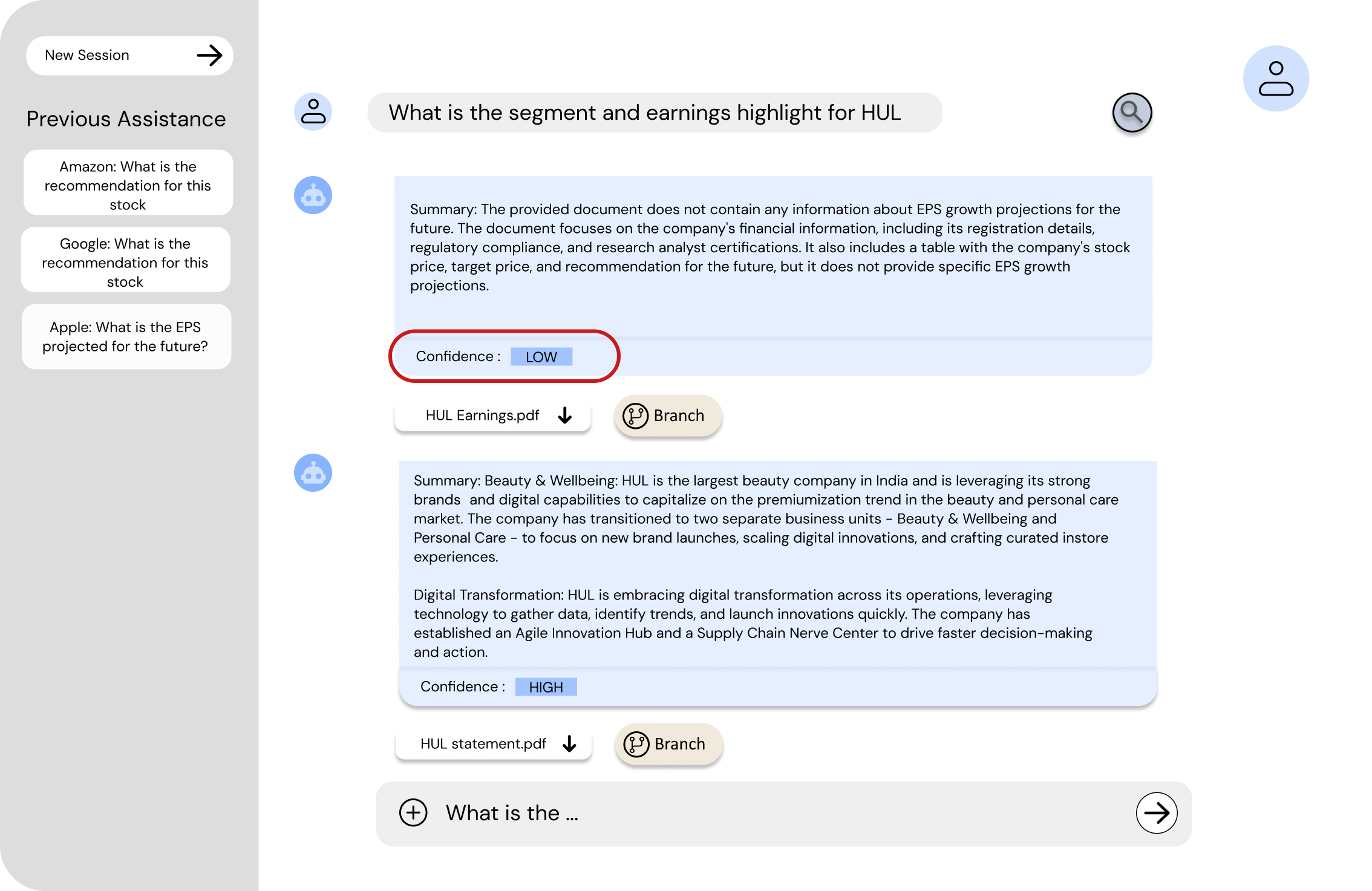

Source Attribution & Confidence Levels

- Each response includes confidence levels (LOW, MEDIUM, HIGH)

- Source-specific answers rather than synthesized responses

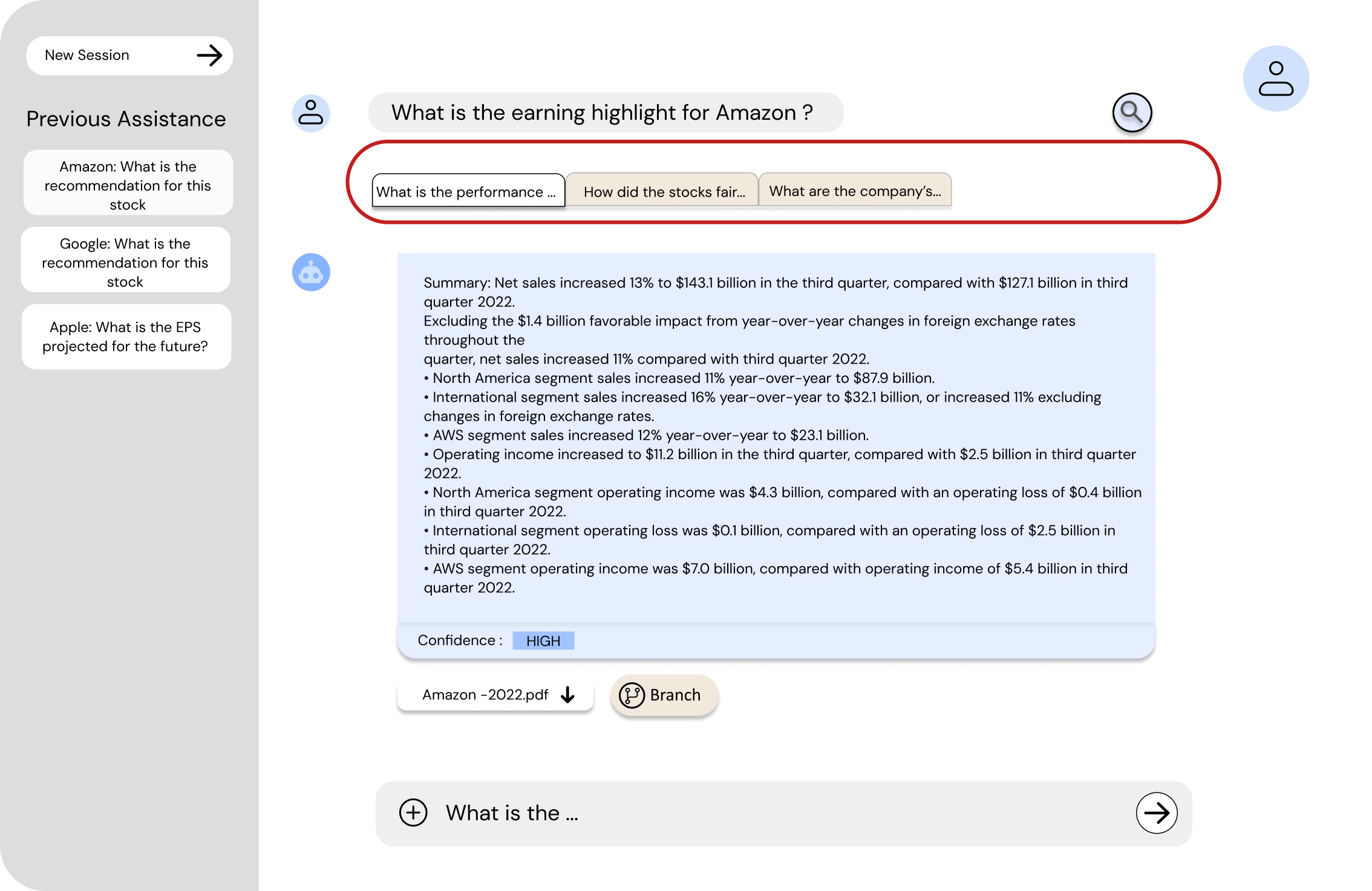

- Meta-summaries highlighting consensus and divergence across sources
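To make the combination of per-source answers, confidence levels and a meta-summary concrete, here is a minimal sketch of one way to represent them. It assumes each source's answer has already been reduced to a short normalized "stance" label that can be compared across sources; the `SourceAnswer` and `meta_summary` names, the stance labels and the majority-vote rule for consensus are all hypothetical illustrations, not the study system's actual data model.

```python
from collections import defaultdict
from dataclasses import dataclass
from enum import Enum
from typing import Dict, List


class Confidence(Enum):
    LOW = "LOW"
    MEDIUM = "MEDIUM"
    HIGH = "HIGH"


@dataclass
class SourceAnswer:
    source_id: str          # e.g. a report filename (hypothetical)
    answer: str             # answer generated from this source alone
    stance: str             # hypothetical normalized claim used to compare sources
    confidence: Confidence  # level shown to the user next to the answer


def meta_summary(answers: List[SourceAnswer]) -> Dict[str, object]:
    """Group per-source answers by stance to surface consensus and divergence."""
    if not answers:
        return {"consensus": None, "supporting": [], "divergent": {}}
    by_stance: Dict[str, List[str]] = defaultdict(list)
    for a in answers:
        by_stance[a.stance].append(a.source_id)
    # Assumption: the majority stance counts as consensus; ties go to the stance seen first.
    consensus = max(by_stance, key=lambda s: len(by_stance[s]))
    divergent = {s: ids for s, ids in by_stance.items() if s != consensus}
    return {"consensus": consensus, "supporting": by_stance[consensus], "divergent": divergent}


# Illustrative inputs only; these are not study data.
answers = [
    SourceAnswer("broker_a.pdf", "Margins are expected to expand.", "positive", Confidence.HIGH),
    SourceAnswer("broker_b.pdf", "Margins should widen modestly.", "positive", Confidence.MEDIUM),
    SourceAnswer("broker_c.pdf", "Cost pressure may compress margins.", "negative", Confidence.LOW),
]
summary = meta_summary(answers)
print(summary)
```

Keeping each answer tied to a single source is what lets the meta-summary name exactly which reports agree and which diverge, rather than hiding the disagreement inside one synthesized response.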

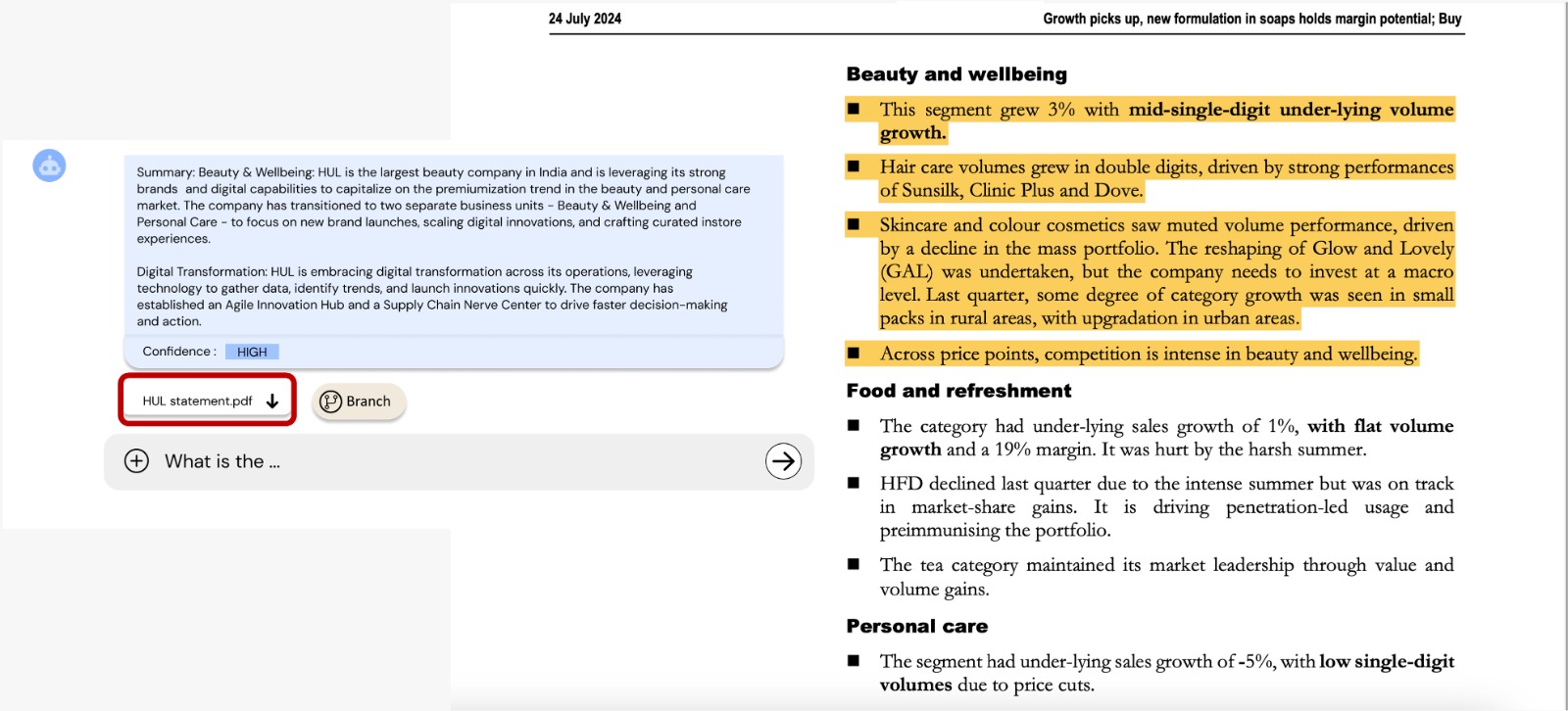

Transparency Through Highlighted Text

- Specific document sections used for generation are highlighted

- Direct links to source PDFs with visual highlighting

- Enables traceability and intelligibility of system reasoning
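The highlighting idea can be sketched as scoring each sentence of a retrieved passage against the generated answer and marking the sentences above a similarity threshold. This is a minimal sketch under stated assumptions: it uses bag-of-words cosine similarity as a stand-in for the semantic embeddings a real system would use, and the `highlight_spans` name and the 0.3 threshold are hypothetical.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def bag_of_words(text: str) -> Counter:
    # Term counts; a crude stand-in for a sentence-embedding model (assumption).
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    # Missing terms in a Counter read as 0, so only shared terms contribute.
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def highlight_spans(answer: str, passage: str, threshold: float = 0.3) -> List[Tuple[str, float]]:
    """Return passage sentences similar enough to the answer to be highlighted."""
    answer_vec = bag_of_words(answer)
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", passage) if s.strip()]
    scored = [(s, cosine(answer_vec, bag_of_words(s))) for s in sentences]
    return [(s, score) for s, score in scored if score >= threshold]


passage = ("Revenue grew 12% year over year. The CEO will retire in June. "
           "Operating margin improved to 18%.")
answer = "Revenue grew 12% and operating margin improved."
spans = highlight_spans(answer, passage)
for sentence, score in spans:
    print(f"[HIGHLIGHT {score:.2f}] {sentence}")
```

The unrelated sentence about the CEO scores zero and stays unhighlighted, which is the behavior that lets a reader see exactly which parts of the PDF the answer drew on.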

User Control Through Branching

- Management of multiple inquiry streams

- Source-specific questioning capabilities

- Parallel exploration of different reports or topics
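One way to picture branching is as a tree of inquiry streams, where each branch keeps its own questions and is scoped to a set of source documents. The sketch below is a hypothetical data structure, not the system's implementation. It makes two labeled assumptions: a child branch inherits the parent's question history at the moment it is created, and a child may only narrow (never widen) its parent's sources.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Branch:
    """One inquiry stream scoped to a set of source documents (hypothetical model)."""
    name: str
    sources: List[str]                                  # documents this branch may retrieve from
    context: List[str] = field(default_factory=list)    # questions inherited when the branch was created
    questions: List[str] = field(default_factory=list)  # questions asked in this branch
    children: List["Branch"] = field(default_factory=list)

    def ask(self, question: str) -> None:
        self.questions.append(question)

    def history(self) -> List[str]:
        # Full context visible to this branch: inherited questions, then its own.
        return self.context + self.questions

    def branch(self, name: str, sources: Optional[List[str]] = None) -> "Branch":
        # Assumption: a child can focus on a subset of the parent's sources, e.g. one report.
        scoped = list(sources) if sources is not None else list(self.sources)
        if not set(scoped) <= set(self.sources):
            raise ValueError("a branch can only narrow its parent's sources")
        child = Branch(name, scoped, context=self.history())
        self.children.append(child)
        return child


root = Branch("main", ["broker_a.pdf", "broker_b.pdf"])
root.ask("What is the consensus on Q3 margins?")
deep_dive = root.branch("broker_a deep dive", ["broker_a.pdf"])
deep_dive.ask("What assumptions drive broker A's forecast?")
root.ask("Has any company changed its guidance?")  # parallel stream, unaffected by the branch
print(deep_dive.history())
```

Snapshotting the context at branch time keeps parallel streams independent: questions asked later in the main thread do not leak into a report-specific deep dive.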

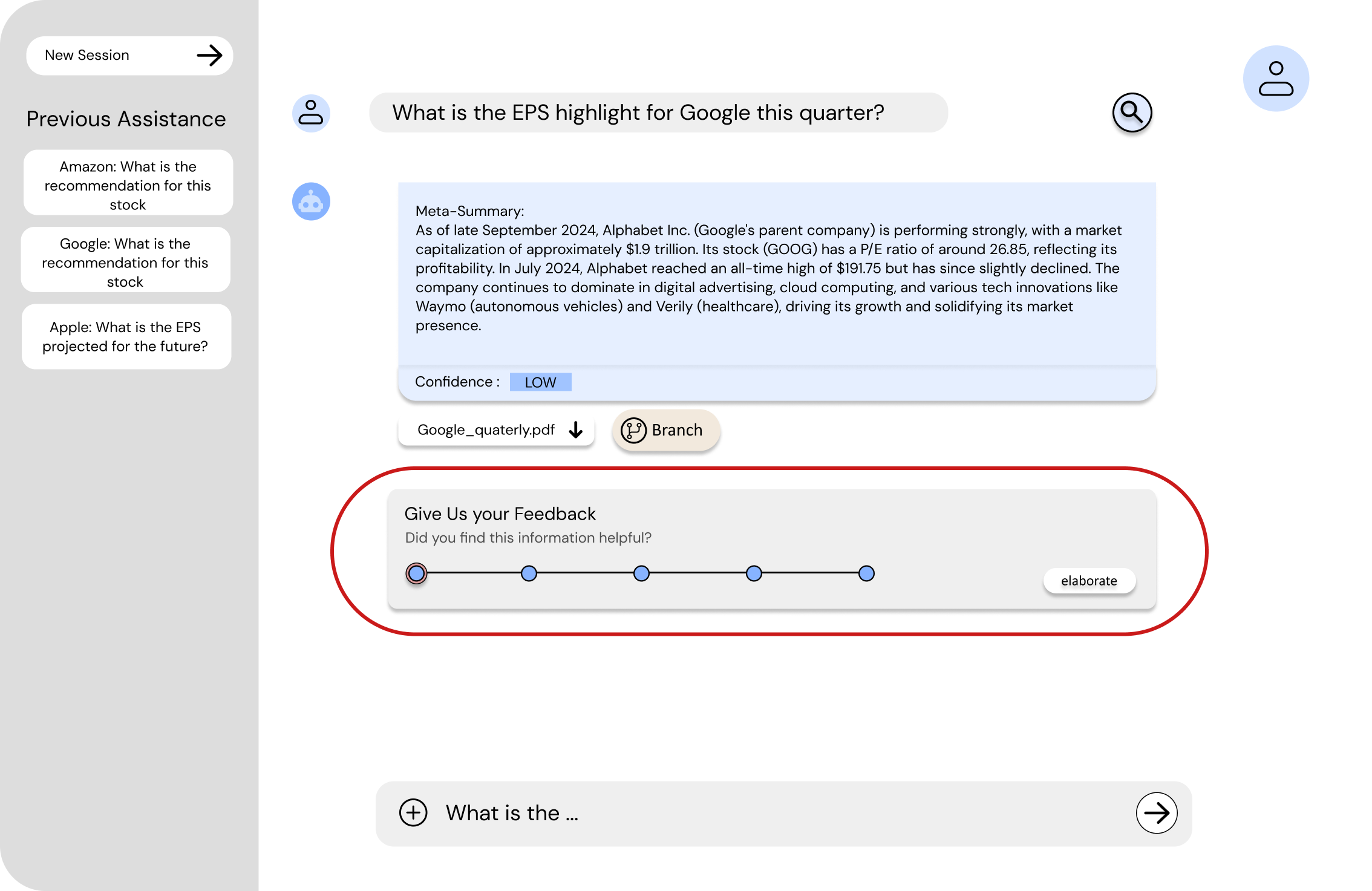

Feedback Mechanisms

- Categorical and qualitative feedback options

- Human-machine collaboration support

- System improvement through user input
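A feedback mechanism that supports both categorical and qualitative input can be modeled as a small record plus an aggregation step that shows users how their input is being counted. The category names, the `Feedback` record and the `summarize` function below are hypothetical, chosen only to illustrate the idea.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum
from typing import Dict, List, Optional


class FeedbackCategory(Enum):
    # Hypothetical category set; the study's actual options may differ.
    ACCURATE = "accurate"
    INACCURATE = "inaccurate"
    INCOMPLETE = "incomplete"
    WRONG_SOURCE = "wrong_source"


@dataclass(frozen=True)
class Feedback:
    response_id: str
    category: FeedbackCategory   # categorical feedback
    comment: Optional[str] = None  # free-text (qualitative) feedback


def summarize(feedback: List[Feedback]) -> Dict[str, object]:
    """Aggregate feedback into category counts plus the collected free-text comments."""
    counts = Counter(f.category.value for f in feedback)
    comments = [f.comment for f in feedback if f.comment]
    return {"counts": dict(counts), "comments": comments}


# Illustrative entries only; these are not study data.
log = [
    Feedback("r1", FeedbackCategory.ACCURATE),
    Feedback("r1", FeedbackCategory.WRONG_SOURCE, "Cited last year's report, not the latest one."),
    Feedback("r2", FeedbackCategory.INCOMPLETE, "Missed the segment breakdown."),
]
report = summarize(log)
print(report)
```

Storing the category separately from the comment makes the structured part easy to aggregate, while the free text preserves the nuance that experts often want to convey.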

RESEARCH FINDINGS

KEY INSIGHTS FROM USER STUDY

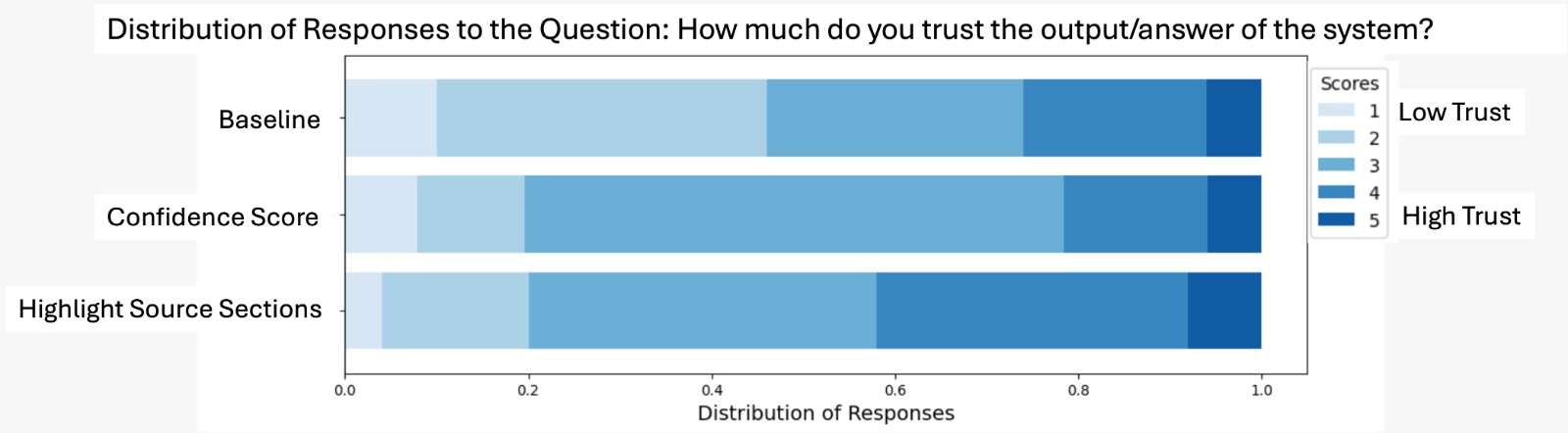

#1 IMPACT ON TRUST

- Confidence levels alone did not significantly increase trust (p = 0.080)

- Text highlighting + confidence levels significantly increased trust (p = 0.0036)

- Transparency features were more impactful than confidence indicators alone

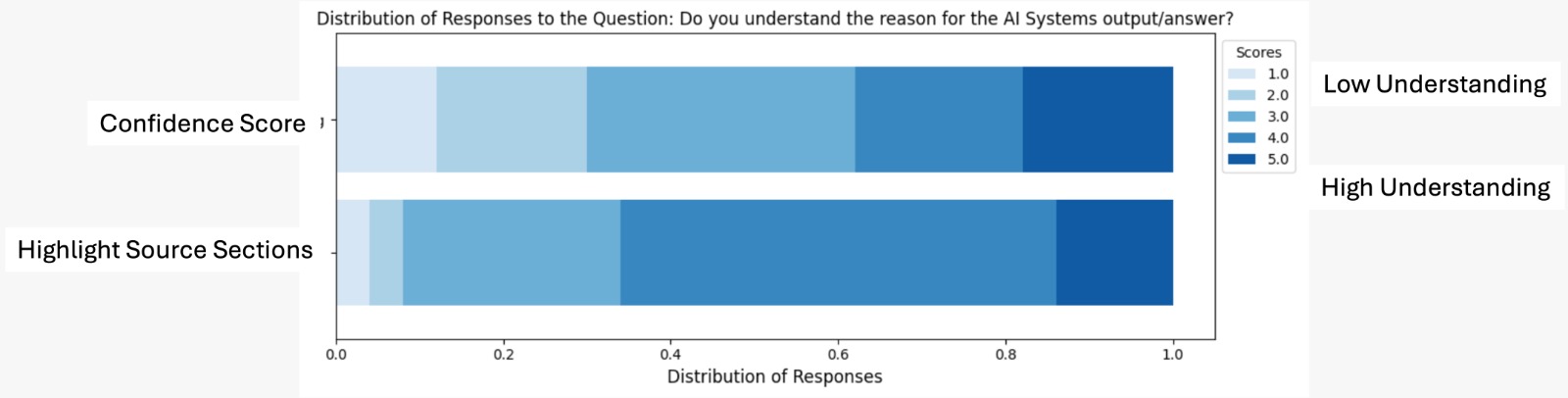

#2 IMPACT ON UNDERSTANDING

- Text highlighting significantly improved understanding (p = 0.0021)

- Users could better trace reasoning with highlighted source sections

- Visual transparency was more effective than confidence levels alone

#3 USER CONTROL PREFERENCE

Four key themes emerged from qualitative analysis:

Control Over Sources (15/50 participants)

- Desire to shortlist specific trusted reports

- Preference for certain brokerage sources

- Need to include additional document types

Personalization & Adaptability (8/50 participants)

- Strong preferences for specific analysts

- Balance between personalization and avoiding bias

- System customization capabilities

Branching & Iteration (27/50 participants)

- High value in branching off specific reports

- Iterative questioning for deeper understanding

- Greater analytical control

Feedback Mechanisms (26/50 participants)

- Transparency in how feedback is used

- System improvement through user input

- Historical data evaluation capabilities

DESIGN IMPLICATIONS

Our findings reveal that visual transparency features, particularly source highlighting, are more effective than confidence levels alone in building user trust and understanding in RAG systems. While confidence levels provided useful context, on their own they did not significantly increase trust (p = 0.080). In contrast, highlighting exactly which document sections informed each response, combined with confidence levels, significantly increased trust (p = 0.0036), and text highlighting significantly improved understanding (p = 0.0021).

Domain experts require user control mechanisms such as source filtering and branching capabilities to maintain agency over their decision-making processes, with 27 out of 50 participants finding branching functionality particularly valuable for investigating specific reports in depth.

Additionally, verification workflows become essential in high-stakes contexts where professionals must trace AI-generated insights back to original sources for accountability and regulatory compliance, enabling them to validate recommendations before making critical financial decisions.

RESEARCH IMPACT

Academic Contributions:

- Novel user-centered approach to RAG system design for domain experts

- Empirical evidence on the relationship between transparency features and trust

- Actionable design guidelines for trustworthy AI systems in high-stakes domains

Practical Applications:

- Framework applicable to other expert domains (legal, healthcare, consulting)

- Design patterns for transparency in AI-assisted decision making

- User study methodology for evaluating trust in AI systems

TECHNICAL APPROACH

System Architecture:

- Retrieval-Augmented Generation with vector database storage

- Confidence scoring based on relevance and factuality metrics

- Document highlighting through semantic similarity matching

- Multi-source response generation with source attribution
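The retrieval and confidence-scoring steps can be sketched as follows. This is a minimal illustration under stated assumptions: an in-memory dictionary of vectors stands in for the vector database, relevance is taken to be the retrieval cosine similarity, the factuality score is assumed to come from some separate check (for example an entailment model) and is simply passed in, and the equal weighting and the 0.75/0.5 cut-offs for HIGH/MEDIUM/LOW are hypothetical values, not the study's.

```python
import math
from typing import Dict, List, Tuple


def cosine(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def retrieve(query_vec: List[float], store: Dict[str, List[float]], k: int = 2) -> List[Tuple[str, float]]:
    """Rank stored chunk vectors by cosine similarity (in-memory stand-in for a vector database)."""
    scored = [(chunk_id, cosine(query_vec, vec)) for chunk_id, vec in store.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)[:k]


def confidence_level(relevance: float, factuality: float, w: float = 0.5) -> str:
    """Blend two scores in [0, 1] and bucket the result; weight and cut-offs are hypothetical."""
    score = w * relevance + (1 - w) * factuality
    if score >= 0.75:
        return "HIGH"
    if score >= 0.5:
        return "MEDIUM"
    return "LOW"


# Toy 3-dimensional vectors keyed by hypothetical "report#page" chunk IDs.
store = {
    "report_a#p3": [0.9, 0.1, 0.0],
    "report_b#p7": [0.2, 0.8, 0.1],
    "report_c#p1": [0.7, 0.3, 0.1],
}
top = retrieve([1.0, 0.0, 0.0], store, k=2)
for chunk_id, relevance in top:
    print(chunk_id, round(relevance, 3), confidence_level(relevance, factuality=0.8))
```

Because each retrieved chunk keeps its ID, the same record can drive source attribution, highlighting and the confidence label shown next to each source-specific answer.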

Evaluation Methodology:

- Pre/post trust surveys with Likert scales

- Controlled task-based user study design

- Statistical analysis using Wilcoxon signed-rank tests

- Qualitative thematic analysis of user preferences
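For readers unfamiliar with the Wilcoxon signed-rank test, the sketch below shows how it compares paired pre/post Likert ratings without assuming normally distributed scores. It is a self-contained illustration using the normal approximation with a tie correction, which suits Likert data where ties are common; in practice `scipy.stats.wilcoxon` is the usual tool and can also compute exact p-values for small samples. The ratings are made up for illustration and are not data from the study.

```python
import math
from typing import List, Tuple


def wilcoxon_signed_rank(before: List[float], after: List[float]) -> Tuple[float, float]:
    """Two-sided Wilcoxon signed-rank test using the normal approximation.

    Zero differences are dropped and tied |differences| receive average ranks.
    Returns (W, p), where W is the smaller of the positive and negative rank sums.
    """
    diffs = [a - b for b, a in zip(before, after) if a != b]
    n = len(diffs)
    if n == 0:
        return 0.0, 1.0
    # Rank absolute differences (1-based), averaging ranks within tie groups.
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        t = j - i + 1
        tie_term += t ** 3 - t
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    w = min(w_plus, w_minus)
    mean = n * (n + 1) / 4
    var = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48  # tie-corrected variance
    if var == 0:
        return w, 1.0
    z = (w - mean) / math.sqrt(var)
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return w, min(p, 1.0)


# Made-up 5-point trust ratings for ten participants, before and after a condition.
pre = [3, 2, 4, 3, 2, 3, 4, 2, 3, 3]
post = [4, 4, 4, 5, 3, 4, 5, 3, 4, 2]
w, p = wilcoxon_signed_rank(pre, post)
print(f"W = {w}, p = {p:.4f}")
```

A signed-rank test fits pre/post designs because each participant serves as their own control, and it relies only on the ranks of the differences, which is appropriate for ordinal Likert responses.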

REFLECTION

This research project taught me the importance of human-centered design in AI systems. While technical improvements in RAG systems are important, user trust and understanding are equally critical for successful adoption.

- Users need agency, not just accurate responses

- Transparency must be actionable, not just informative

- Domain expertise shapes how users interact with AI explanations

- Trust building requires multiple complementary design features

The study reinforced that successful AI systems for experts require a careful balance between system capability and user control, with transparency serving as the bridge between technical functionality and user confidence.

Publication Details

Full Citation: Divya Ravi and Renuka Sindhgatta. 2025. Exploring Trust and Transparency in Retrieval-Augmented Generation for Domain Experts. In Extended Abstracts of the CHI Conference on Human Factors in Computing Systems (CHI EA '25), April 26–May 01, 2025, Yokohama, Japan. ACM, New York, NY, USA, 7 pages.

DOI: https://doi.org/10.1145/3706599.3719985

Conference: CHI EA '25 - Extended Abstracts of the CHI Conference on Human Factors in Computing Systems